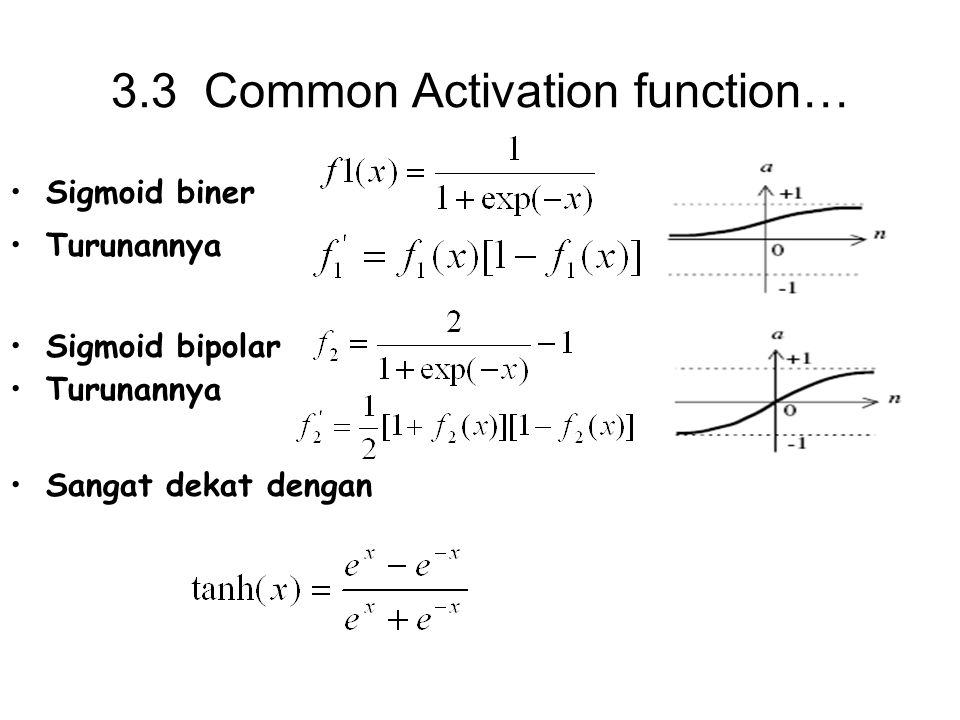

Bipolar Sigmoid Activation Function

Jun 7, 2013 - The demo program illustrates three common neural network activation functions: logistic sigmoid, hyperbolic tangent and softmax. Using the logistic sigmoid activation function for both the input-hidden and hidden-output layers, the output values are 0.6395 and 0.6649. The same inputs, weights and bias values are used for each activation function.

The most popular continuous activation functions are the unipolar and bipolar sigmoid functions. See also 'Neural Networks on C#'.
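The activation functions named above can be sketched in Java as follows. This is a minimal illustration, not the demo program's code: the class and method names are hypothetical, and the demo's actual inputs, weights and bias are not given here, so the sketch does not reproduce the demo's output values. The unipolar sigmoid is the logistic function 1/(1 + e^-x), with range (0, 1). The bipolar sigmoid rescales it to 2/(1 + e^-x) - 1, with range (-1, 1), which is algebraically identical to tanh(x/2). Some formulations add a steepness factor to the exponent; this sketch assumes a factor of 1.

```java
import java.util.Arrays;

/** Hypothetical sketch of the activation functions discussed above (not the demo's code). */
public class Activations {

    /** Logistic (unipolar) sigmoid: 1 / (1 + e^-x), range (0, 1). */
    public static double logistic(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    /** Hyperbolic tangent, range (-1, 1). */
    public static double hyperTan(double x) {
        return Math.tanh(x);
    }

    /** Bipolar sigmoid: 2 / (1 + e^-x) - 1, range (-1, 1); equal to tanh(x / 2). */
    public static double bipolarSigmoid(double x) {
        return 2.0 / (1.0 + Math.exp(-x)) - 1.0;
    }

    /** Softmax over a layer's raw outputs; the results are positive and sum to 1. */
    public static double[] softmax(double[] z) {
        // Subtract the max before exponentiating so Math.exp cannot overflow.
        double max = Arrays.stream(z).max().getAsDouble();
        double[] out = new double[z.length];
        double sum = 0.0;
        for (int i = 0; i < z.length; i++) {
            out[i] = Math.exp(z[i] - max);
            sum += out[i];
        }
        for (int i = 0; i < z.length; i++) {
            out[i] /= sum;
        }
        return out;
    }

    public static void main(String[] args) {
        double x = 0.5;
        System.out.printf("logistic(%.1f)       = %.4f%n", x, logistic(x));
        System.out.printf("tanh(%.1f)           = %.4f%n", x, hyperTan(x));
        System.out.printf("bipolarSigmoid(%.1f) = %.4f%n", x, bipolarSigmoid(x));
        System.out.printf("tanh(%.1f / 2)       = %.4f%n", x, Math.tanh(x / 2));
        System.out.println("softmax([1, 2, 3]) = "
                + Arrays.toString(softmax(new double[] {1.0, 2.0, 3.0})));
    }
}
```

Subtracting the maximum inside softmax does not change the result, because the common factor cancels in the ratio, but it keeps the exponentials finite for large inputs.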

BipolarSigmoidFunction (Vizzini API v0.3.0), package org.vizzini.ai.neuralnetwork.function

public class BipolarSigmoidFunction extends … (All Implemented Interfaces: …)

Provides a bipolar sigmoid activation function for a neural network. This function returns a value in [-1,1].

Since: v0.1. Version: v0.3. Author: Jeffrey M. Thompson.

Constructor Summary: BipolarSigmoidFunction()

Method Summary:

- double …(double x): Calculate the derivative of this function at the given input.
- double …(double x): Calculate the value of this function at the given input.
- double …(): Return the maximum value this function can produce.
- double …(): Return the minimum value this function can produce.
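The four operations in the method summary can be illustrated with a small stand-alone Java class. This is a hypothetical sketch, not Vizzini's implementation: the class name BipolarSigmoidSketch and the method names evaluate, derivative, getMaxValue and getMinValue are invented for illustration, and the real class's superclass and interfaces are not modeled. It assumes the common definition f(x) = 2/(1 + e^-x) - 1, whose derivative can be written as f'(x) = (1 - f(x)^2)/2.

```java
/**
 * Hypothetical sketch of a bipolar sigmoid activation function offering the four
 * operations listed in the method summary. Names are illustrative, not Vizzini's API.
 */
public class BipolarSigmoidSketch {

    /** Value: 2 / (1 + e^-x) - 1. Saturates to -1 or 1 for large |x| without producing NaN. */
    public double evaluate(double x) {
        return 2.0 / (1.0 + Math.exp(-x));
    }

    /**
     * Derivative: (1 - f(x)^2) / 2, written in terms of the function value so it
     * stays finite even when e^-x overflows for very negative x.
     */
    public double derivative(double x) {
        double fx = evaluate(x);
        return 0.5 * (1.0 - fx * fx);
    }

    /** Upper bound of the output range. */
    public double getMaxValue() {
        return 1.0;
    }

    /** Lower bound of the output range. */
    public double getMinValue() {
        return -1.0;
    }

    public static void main(String[] args) {
        BipolarSigmoidSketch f = new BipolarSigmoidSketch();
        for (double x : new double[] {-5.0, -1.0, 0.0, 1.0, 5.0}) {
            System.out.printf("x=%5.1f  f(x)=%8.5f  f'(x)=%8.5f%n", x, f.evaluate(x), f.derivative(x));
        }
        System.out.printf("range: [%.1f, %.1f]%n", f.getMinValue(), f.getMaxValue());
    }
}
```

Expressing the derivative through f(x) instead of through e^-x directly avoids an Infinity/Infinity (NaN) result for very negative inputs, and it lets a training loop reuse an output value it has already computed during the forward pass.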